From June 16 to 20, 2025, the second edition of Les Mouettes Savantes brought together 30 girls in seconde (the first year of French upper secondary school) from lycées in Brest, Rennes and the southern Paris area.

On the agenda for this science project, designed and organized by five women researchers and faculty members, including Marie Etienne (ENSAI-CREST): discovering mathematical research in the service of environmental transitions, at the University of Rennes biological station in the Paimpont forest.

The carbon footprint of generative AI, food, temperature trends, the tiger mosquito and sustainable fishing: mornings were devoted to scientific workshops drawing on mathematics and computer science skills, applied to current environmental issues. Afternoons were given over to sports, games and cultural activities in the heart of the Brocéliande forest.

The verdict? A resounding success for this program, whose goal is to strengthen the presence of young women in scientific studies and careers.

Marie Etienne presented the Les Mouettes Savantes project at the 2025 Journées Parité de la communauté mathématique, held on June 23 and 24 at the University of Rennes, Beaulieu campus.

Ouest France covered the latest edition of Les Mouettes Savantes in its June 21, 2025 issue.

Learn more about the Les Mouettes Savantes project

Arnak Dalalyan awarded an ERC Advanced Grant for his work on generative AI models

We are proud to announce that Arnak Dalalyan, director of CREST, Hi! PARIS Fellow and Professor of statistics at ENSAE Paris, has been awarded a European Research Council (ERC) Advanced Grant for his project SAGMOS – Statistical Analysis of Generative Models: Sampling Guarantees and Robustness. This highly competitive grant will support his ambitious research at the intersection of statistics, machine learning, and artificial intelligence.

With this new grant, CREST now hosts eight ongoing ERC projects: three ERC Starting Grants, three ERC Consolidator Grants, and now two ERC Advanced Grants. This track record reflects the strength, diversity, and international visibility of fundamental and applied research at the lab. Arnak Dalalyan joins a long line of CREST researchers distinguished by the ERC, following, for example, the recent ERC Consolidator Grant obtained by Yves Le Yaouanq in economics.

About Arnak Dalalyan

A mathematician and statistician, Arnak Dalalyan studied at Yerevan State University (Armenia) before obtaining a PhD in statistics from Le Mans University in 2001, and an HDR from Sorbonne University (formerly Université Pierre et Marie Curie) in 2007. After postdoctoral research at Humboldt University in Berlin and several academic positions in France, he joined ENSAE Paris and CREST, where he has been director since 2020. His research focuses on mathematical statistics and its applications to machine learning, with particular emphasis on robust methods, high-dimensional data, and sampling techniques.

SAGMOS – Statistical Analysis of Generative Models: Sampling Guarantees and Robustness

Generative models — algorithms capable of creating realistic texts, images, music, or molecular structures — are now at the heart of many technological innovations, from artistic creation to drug discovery. The SAGMOS project will provide new mathematical guarantees on the reliability, originality, and efficiency of such models, particularly diffusion models that have become the new standard in the field.

“In recent years, generative models have made remarkable progress. But we still need to understand how much data is required for these models to generate reliable and truly novel outputs. The SAGMOS project aims to bridge this gap by providing precise theoretical guarantees,” explains Arnak Dalalyan.

The project will lead to the recruitment of PhD students and postdoctoral researchers, and to the organisation of international workshops to disseminate its findings.

The ERC Advanced Grant

The ERC Advanced Grant is one of the most prestigious funding schemes in Europe, supporting established researchers with a track record of significant research achievements. The grant allows recipients to pursue ground-breaking, high-risk projects that can lead to major scientific advances. The funding awarded to Arnak Dalalyan reflects the European commitment to frontier research and the scientific excellence of the CREST research centre.

Beyond the PhD – CREST Series #3: Insights from PhD Supervisors

At CREST, we aim to showcase the diverse career trajectories and perspectives of researchers who have navigated the path beyond their PhD. The “Beyond the PhD” series provides an opportunity to hear directly from experts across different fields about their experiences, challenges, and insights on life as a researcher.

For this third installment, we are featuring four distinguished researchers: Benoit Schmutz (Ecole polytechnique, IP Paris), Caroline Hillairet (GENES, ENSAE Paris, IP Paris), Paola Tubaro (CNRS, ENSAE Paris, IP Paris), and Nicolas Chopin (GENES, ENSAE Paris, IP Paris). Each of them shares their thoughts on the PhD journey, its impact on their careers, and the broader role of research in society.

Why pursue a PhD at CREST?

A PhD is much more than just academic training—it’s about developing a mindset that allows you to explore complex questions, think critically, and push the boundaries of knowledge. Our researchers highlight three key aspects that define the PhD experience at CREST:

🔹 An Environment for Intellectual Growth – A PhD is a journey of constant learning. You will develop a structured way of thinking, gain expertise in your field, and learn how to navigate uncertainty in research.

🔹 Opportunities for Interdisciplinary Work – At CREST, you will interact with researchers across economics, sociology, finance, and statistics, allowing for a dynamic and stimulating research experience.

🔹 A Supportive and Collaborative Research Culture – While research can be challenging, you will not be alone. Engaging with peers, supervisors, and the broader academic community is essential, and at CREST, we value collaboration as much as independence.

Watch the introduction to this series below.

Meet the Researchers

Each researcher featured in this edition brings a unique perspective on the PhD experience and beyond.

Benoit Schmutz – From PhD to Applied Economics

Benoit discusses how a PhD equips students with essential analytical skills, particularly in understanding labor markets and urban economics. He emphasizes the importance of rigor and adaptability in research.

🎥 Watch Benoit’s insights

Caroline Hillairet – Mathematics, Finance, and Research Opportunities

Caroline shares her journey from mathematics to finance, highlighting how a PhD opens doors to interdisciplinary research and the application of theoretical models to real-world problems.

🎥 Watch Caroline’s insights

Paola Tubaro – Networks, Society, and the Role of Research

Paola discusses how her research in computational social sciences evolved, emphasizing the collaborative nature of research and the impact of digital transformations on society.

🎥 Watch Paola’s insights

Nicolas Chopin – The Art of Asking the Right Questions

Nicolas talks about the role of uncertainty in research, how a PhD teaches resilience, and the impact of Bayesian statistics in various domains, including machine learning.

🎥 Watch Nicolas’s insights

Advice for Future PhD Students

If you are considering applying for a PhD at CREST, here are a few key takeaways from our researchers:

✅ Stay curious – Research is about exploration, so embrace new ideas and unexpected results.

✅ Engage with the academic community – Attend conferences, collaborate with other researchers, and actively participate in lab activities.

✅ Be patient and persistent – Progress in research takes time. Learning how to overcome obstacles is part of the PhD experience.

✅ Think beyond the thesis – Your PhD is not just about writing a dissertation; it’s about developing a way of thinking that will shape your career.

Check out our two previous series of Beyond the PhD on the CREST YouTube Channel:

Beyond the PhD – Series 1 – a video on the international job market, featuring economics PhD students.

Beyond the PhD – Series 2 – a series of videos in which our PhD students define what a PhD is.

CREST at the IP Paris IA Action Summit: Exploring AI for Economic Forecasting and Algorithmic Optimization

On February 6, 2025, Institut Polytechnique de Paris hosted the IA Action Summit at École polytechnique, a high-level event bringing together leading researchers, industry experts, and policymakers to discuss the transformative role of artificial intelligence. Among the distinguished participants, Anna Simoni and Vianney Perchet, researchers at CREST, presented their latest work on AI applications in macroeconomic forecasting and algorithmic optimization, respectively.

Macroeconomic Nowcasting with AI and Alternative Data – Anna Simoni

Anna Simoni (CNRS, ENSAE Paris, École polytechnique, Hi!Paris Fellow) presented her work on integrating artificial intelligence and alternative data to improve macroeconomic nowcasting. She addressed the challenge of forecasting key economic indicators such as GDP growth, inflation, and financial cycles in real time, an essential task for policymakers, financial institutions, and businesses.

Her research highlights the potential of AI to handle large, complex, and mixed-frequency datasets from both traditional (e.g., national statistics, financial markets) and non-traditional sources (e.g., Google search data, satellite imagery, mobile phone traffic, credit card transactions). By applying machine learning techniques, including dynamic factor models, neural networks, and Bayesian methods, her work demonstrates how AI-driven models can enhance nowcasting accuracy, detect economic shifts early, and provide better insights into economic trends.

Through real-world applications, including an analysis of Google search data for GDP nowcasting, her findings reveal that AI methods can significantly improve short-term economic predictions, especially in periods of high uncertainty or economic recessions. However, she also emphasized that AI models must be carefully designed to ensure interpretability and reliability, as pure data-driven approaches do not always outperform traditional methods.

AI-Powered AI: Enhancing Algorithmic Decision-Making – Vianney Perchet

Vianney Perchet (GENES, Hi!Paris Fellow) introduced the concept of AI-powered AI, where artificial intelligence assists and improves algorithmic decision-making in various fields, from optimization to reinforcement learning and recommender systems.

He explored how AI-oracles—AI systems that provide tentative solutions to computational tasks—can be leveraged to accelerate decision-making processes. Through examples like binary search with AI hints, he demonstrated the trade-offs between consistency and robustness: algorithms must be able to trust AI-generated predictions when they are accurate but remain resilient when faced with imperfect or misleading information.
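To make the consistency/robustness trade-off concrete, here is a minimal Python sketch of binary search with a hint. It is not taken from the talk: it uses the textbook doubling-search construction from the learning-augmented algorithms literature, and the function name `search_with_hint` and the probe counter are hypothetical names introduced for illustration. The search starts at the position the oracle suggests, widens its window by doubling steps until the target is bracketed, and then runs an ordinary binary search inside that window.

```python
from typing import Sequence, Tuple


def search_with_hint(items: Sequence[int], target: int, hint: int) -> Tuple[int, int]:
    """Locate `target` in sorted `items`, starting from an oracle's guess.

    Returns (index, probes): the leftmost index i with items[i] >= target
    (len(items) if there is none) and the number of elements inspected.
    `hint` is the position suggested by the (hypothetical) AI oracle.
    """
    n = len(items)
    if n == 0:
        return 0, 0

    pos = min(max(hint, 0), n - 1)  # clamp an out-of-range hint
    probes = 1
    step = 1

    if items[pos] < target:
        # The answer lies to the right of the hint: gallop rightwards.
        lo, hi = pos + 1, n
        while pos + step < n:
            probes += 1
            if items[pos + step] >= target:
                hi = pos + step
                break
            lo = pos + step + 1
            step *= 2
    else:
        # The answer is at or to the left of the hint: gallop leftwards.
        lo, hi = 0, pos
        while pos - step >= 0:
            probes += 1
            if items[pos - step] < target:
                lo = pos - step + 1
                break
            hi = pos - step
            step *= 2

    # Ordinary binary search inside the bracket [lo, hi].
    while lo < hi:
        mid = (lo + hi) // 2
        probes += 1
        if items[mid] < target:
            lo = mid + 1
        else:
            hi = mid
    return lo, probes
```

With an accurate hint the bracket closes after a couple of probes (consistency), while a badly wrong hint costs only about twice as many probes as a plain binary search (robustness). In general the number of probes grows with the logarithm of the distance between the hint and the true position.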

Perchet highlighted several fundamental challenges in AI-assisted algorithms, including:

- Strategic AI usage: AI-oracle calls can be costly, requiring efficient selection mechanisms.

- Combination of multiple AI sources: Different AI models may offer varying predictions, requiring aggregation methods to improve reliability.

- Incentivization: AI models may have their own objectives, necessitating mechanisms to align them with user needs.

- Learning from structure: AI-assisted algorithms must adapt and refine predictions over time for better long-term performance.

- Beyond worst-case scenarios: Moving beyond rigid worst-case analyses toward realistic, instance-dependent performance guarantees.

His research opens exciting new perspectives for integrating AI into optimization problems, online learning, and algorithmic decision-making, with applications ranging from robotics and computer vision to financial forecasting and recommender systems.

CREST’s Commitment to AI Research

CREST’s involvement in the IP Paris IA Action Summit, through the contributions of Anna Simoni and Vianney Perchet, demonstrates the lab’s strong engagement in artificial intelligence research applied to economics, forecasting, and decision-making. Their work aligns with ongoing research at CREST on machine learning, reinforcement learning, and statistical AI methods, led by scholars such as Nicolas Chopin, Paola Tubaro, Bruno Crépon, and Olivier Gossner.

As AI continues to transform economic modeling and optimization, CREST continues to explore how AI can enhance forecasting, decision processes, and strategic learning in uncertain environments.

Welcome to the New Researchers Joining CREST in 2025

CREST is excited to welcome three new researchers who arrived in January 2025. Each brings unique expertise and a strong academic background, further strengthening our multidisciplinary research environment.

Jean-François Chassagneux

Position: Full Professor in Mathematics (ENSAE Paris)

Research Interests: Mathematical finance & numerical probability: partial hedging and non-linear pricing, quantitative methods for transition risks and carbon markets, switching problems and reflected BSDEs, numerical methods for mean-field systems.

Previous Position: Full Professor in Applied Mathematics, Université Paris Cité & LPSM

Learn More: Jean-François Chassagneux’s personal webpage

Javier Gonzalez-Delgado

Position: Assistant Professor in Statistics (ENSAI)

Research Interests: Selective inference, hypothesis testing, clustering, and statistical methods for real-world problems in biology, particularly structural biology and genetics.

Previous Position: Postdoctoral researcher, Human Genetics, McGill University

Learn More: Javier Gonzalez-Delgado’s personal webpage

“After a period away from here, I am looking forward to reconnecting with the French research community and developing new connections with scientists at CREST!”

Mohammadreza Mousavi Kalan

Position: Assistant Professor in Statistics (ENSAI)

Research Interests: Statistical machine learning, transfer learning, domain adaptation, outlier detection, optimization theory, distributed computing.

Previous Position: Postdoctoral researcher, Columbia University

“I am excited to join CREST as an Assistant Professor. CREST’s excellent research reputation makes it the perfect place to continue my academic journey. With renowned researchers at CREST, this opens up amazing opportunities for meaningful collaborations that will let me contribute to and grow with this vibrant community. I look forward to impactful research and close collaboration with such inspiring colleagues.”

We are delighted to welcome Jean-François, Javier, and Mohammadreza to CREST. Their expertise and dedication to advancing research will strengthen the lab’s work. Stay tuned for updates on their research and collaborations!

2024 CREST Highlights

As 2024 draws to a close, CREST reflects on a year filled with groundbreaking research, prestigious awards, and impactful initiatives. Here’s a look back at our key achievements.

📊 Research Breakthroughs: 93 Articles Published

CREST has published 93 articles so far, with 62% appearing in Q1 journals. These works reflect the breadth and depth of research conducted across CREST’s clusters. Here are some highlights:

Information Technology and Returns to Scale by Danial Lashkari, Arthur Bauer, and Jocelyn Boussard explores how technological advancements influence economies of scale, shedding light on contemporary production practices, in American Economic Review.

Locus of Control and the Preference for Agency by Marco Caliendo, Deborah Cobb-Clark, Juliana Silva-Goncalves, and Arne Uhlendorff investigates how personal traits shape individuals’ economic decisions, providing a deeper understanding of agency in economic behavior, in European Economic Review.

Global Mobile Inventors by Dany Bahar, Prithwiraj Choudhury, Ernest Miguelez, and Sara Signorelli examines the migration patterns of innovative talent worldwide, offering new perspectives on innovation dynamics, in Journal of Development Economics.

Testing and Relaxing the Exclusion Restriction in the Control Function Approach by Xavier D’Haultfoeuille, Stefan Hoderlein, and Yuya Sasaki provides advanced methodologies to enhance econometric analysis, in Journal of Econometrics.

Are Economists’ Preferences Psychologists’ Personality Traits? A Structural Approach by Tomas Jagelka bridges economics and psychology, exploring how personality traits influence economic preferences, in Journal of Political Economy.

Autoregressive Conditional Betas by Francisco Blasques, Christian Francq, and Sébastien Laurent provides innovative methods to measure financial risk, critical for investment strategies, in Journal of Econometrics.

Model-based vs. Agnostic Methods for the Prediction of Time-Varying Covariance Matrices by Jean-David Fermanian, Benjamin Poignard, and Panos Xidonas compares methodologies for improving financial predictions under uncertainty, in Annals of Operations Research.

Corporate Debt Value Under Transition Scenario Uncertainty by Théo Le Guenedal and Peter Tankov addresses the valuation of corporate debt amid environmental and regulatory changes, in Mathematical Finance.

Semiparametric Copula Models Applied to the Decomposition of Claim Amounts by Sébastien Farkas and Olivier Lopez develops new actuarial techniques to better understand insurance claims, in Scandinavian Actuarial Journal.

On the Chaotic Expansion for Counting Processes by Caroline Hillairet and Anthony Réveillac advances mathematical models with applications in finance and beyond, in Electronic Journal of Probability.

Russia’s Invasion of Ukraine and Perceived Intergenerational Mobility in Europe by Alexi Gugushvili and Patrick Präg examines how geopolitical shocks affect societal perceptions and mobility, in British Journal of Sociology.

The Total Effect of Social Origins on Educational Attainment: Meta-analysis of Sibling Correlations From 18 Countries by Lewis R. Anderson, Patrick Präg, Evelina T. Akimova, and Christiaan Monden provides a meta-analysis of sibling correlations, offering fresh insights into education and inequality, in Demography.

Context Matters When Evacuating Large Cities: Shifting the Focus from Individual Characteristics to Location and Social Vulnerability by Samuel Rufat, Emeline Comby, Serge Lhomme, and Victor Santoni shifts the focus from individual characteristics to social vulnerabilities during urban evacuations, in Environmental Science and Policy.

Gender Equality for Whom? The Changing College Education Gradients of the Division of Paid Work and Housework Among US Couples, 1968-2019 by Léa Pessin explores shifting dynamics in gendered divisions of labor among U.S. couples over the decades, in Social Forces.

The Augmented Social Scientist: Using Sequential Transfer Learning to Annotate Millions of Texts with Human-Level Accuracy by Salomé Do, Etienne Ollion, and Rubing Shen highlights how AI tools can assist in large-scale sociological research with human-level accuracy, in Sociological Methods and Research.

Investigating Swimming Technical Skills by a Double Partition Clustering of Multivariate Functional Data Allowing for Dimension Selection, by Antoine Bouvet, Salima El Kolei, Matthieu Marbac, in Annals of Applied Statistics.

Full-model estimation for non-parametric multivariate finite mixture models, by Marie Du Roy de Chaumaray, Matthieu Marbac, in Journal of the Royal Statistical Society. Series B: Statistical Methodology.

Tail Inverse Regression: Dimension Reduction for Prediction of Extremes, by Anass Aghbalou, François Portier, Anne Sabourin, Chen Zhou, in Bernoulli.

Proxy-analysis of the genetics of cognitive decline in Parkinson’s disease through polygenic scores, by Johann Faouzi, Manuela Tan, Fanny Casse, Suzanne Lesage, Christelle Tesson, Alexis Brice, Graziella Mangone, Louise-Laure Mariani, Hirotaka Iwaki, Olivier Colliot, Lasse Pihlstrom, Jean-Christophe Corvol, in NPJ Parkinson’s Disease.

Benign Overfitting and Adaptive Nonparametric Regression, by Julien Chhor, Suzanne Sigalla, Alexandre Tsybakov, in Probability Theory and Related Fields.

🎯 Discover more CREST publications on our HAL webpage.

🌍 Impactful Events and Conferences

CREST actively participated in and hosted events that fostered collaboration and knowledge exchange:

- European Parliament Panel: Sociologist Paola Tubaro led a pivotal discussion on alternatives to platform-driven gig economies, bringing sociological insights to policy discussions.

- Publication by the National Courts of Audit: Barometer of Fiscal and Social Contributions in France – Second Edition 2023.

- NeurIPS 2024: CREST had a strong presence with 19 papers selected, showcasing cutting-edge work in artificial intelligence and neural information processing.

- Cyber-Risk Conference Cyr2fi: Co-organized with École Polytechnique, this event highlighted the interdisciplinary approaches needed to address growing cybersecurity threats.

- Nobel Prize Lecture: Researchers and PhDs of IP Paris Economics Department celebrated the 2023 Nobel Prize in Economics, reinforcing academic excellence.

📅 Join future events: Visit our calendar.

2024 brought two new chairs at CREST:

- Cyclomob by Marion Leroutier highlights research into sustainable urban mobility, funded through a regional chair.

- Impact Investing Chair by Olivier-David Zerbib aims to maximize the positive impact of investment on the environment and society.

🏆 Awards and Recognitions

2024 was a year of accolades for CREST:

- 5 ERC Grants for CREST in 2024: Yves Le Yaouanq has recently joined the 2024 group of ERC grantees, which already includes Samuel Rufat, Olivier Gossner, Julien Combe, and Marion Goussé.

- CNRS Bronze Medal: Clément Malgouyres for contributions to labor economics.

- L’Oréal-UNESCO Young Talent: Solenne Gaucher recognized for her sustainable development work.

- EALE Young Labor Economist Prize: Federica Meluzzi for innovative labor market studies.

- 2024 AEJ Best Paper Award in Macroeconomics: Giovanni Ricco won the award for his paper “The Transmission of Monetary Policy Shocks,” co-authored with Silvia Miranda-Agrippino.

- Louis Bachelier Prize: Peter Tankov honored for achievements in mathematical finance.

In 2024, several CREST researchers were also appointed to positions at various institutions:

- The Economic Journal: Roland Rathelot was appointed Managing Editor.

- The Econometric Society: Olivier Gossner was named Fellow of the Econometric Society.

- French Ministry of Economics: Franck Malherbet was appointed a member of the Expert Group on the Minimum Wage (SMIC).

📚 Books and Projects

This year, CREST researchers authored several impactful books:

- Ce qui échappe à l’intelligence artificielle, edited by François Levin and Étienne Ollion, critically examines the limits of AI in understanding human complexity.

- Peut-on être heureux de payer des impôts ? by Pierre Boyer engages readers in a thought-provoking discussion on the role of taxation in society.

- Introduction aux Sciences Économiques, cours de première année à l’Ecole polytechnique by Olivier Gossner et al. serves as an accessible entry point for students into economic principles.

- Une étrange victoire, l’extrême droite contre la politique by Michaël Foessel and Étienne Ollion explores the relationship between politics and far-right ideologies.

2024 also saw the second series of Beyond the PhD, a set of videos dedicated to the PhD experience. This series explored how the definition of a PhD evolves, through students at different stages of their studies across all CREST research clusters.

📣 Media and Outreach

CREST researchers were featured in:

- 80+ media outlets, including Le Monde, Le Nouvel Obs, Les Échos, University World News, BBC News Brazil, France Culture, Libération, Le Cercle des Économistes, Mediapart, AOC…

- 30+ op-eds and articles, shaping public discourse.

🎙️ Featured Interview: Pauline Rossi discusses economic inequalities in Le Cercle des Économistes. Listen here.

CREST celebrates a year of remarkable achievements and meaningful contributions to research, society, and global conversations. From groundbreaking publications to prestigious awards and impactful events, our community has continued to push boundaries and inspire innovation.

Looking ahead to 2025, we remain committed to fostering interdisciplinary research, addressing societal challenges, and nurturing a collaborative environment for researchers and students.

2024 CREST Highlights

As 2024 draws to a close, CREST reflects on a year filled with groundbreaking research, prestigious awards, and impactful initiatives. Here’s a look back at our key achievements.

📊 Research Breakthroughs: 93 Articles Published

CREST published 93 articles so far, with 62% appearing in Q1 journals. These works reflect the breadth and depth of research conducted across CREST’s clusters. Here are some highlights:

Information Technology and Returns to Scale by Danial Lashkari, Arthur Bauer, and Jocely Boussard explores how technological advancements influence economies of scale, shedding light on contemporary production practices, in American Economic Review

Locus of Control and the Preference for Agency by Marco Caliendo, Deborah Cobb-Clark, Juliana Silva-Goncalves, and Arne Uhlendorff investigates how personal traits shape individuals’ economic decisions, providing a deeper understanding of agency in economic behavior, in European Economic Review.

Global Mobile Inventors by Dany Bahar, Prithwiraj Choudhury, Ernest Miguelez, and Sara Signorelli examines the migration patterns of innovative talent worldwide, offering new perspectives on innovation dynamics, in Journal of Development Economics.

Testing and Relaxing the Exclusion Restriction in the Control Function Approach by Xavier D’Haultfoeuille, Stefan Hordelein, and Yuya Sasaki provides advanced methodologies to enhance econometric analysis, in Journal of Econometrics.

Are Economists’ Preferences Psychologists’ Personality Traits? A Structural Approach by Tomas Jagelka bridges economics and psychology, exploring how personality traits influence economic preferences, in Journal of Political Economy.

Autoregressive Conditional Betas by Francisco Blasques, Christian Francq, and Sébastien Laurent provides innovative methods to measure financial risk, critical for investment strategies, in Journal of Econometrics.

Model-based vs. Agnostic Methods for the Prediction of Time-Varying Covariance Matrices by Jean-David Fermanian, Benjamin Poignard, and Panos Xidonas compares methodologies for improving financial predictions under uncertainty, in Annals of Operations Research.

Corporate Debt Value Under Transition Scenario Uncertainty by Theo Le Guedenal and Peter Tankov addresses the valuation of corporate debt amid environmental and regulatory changes, in Mathematical Finance.

Semiparametric Copula Models Applied to the Decomposition of Claim Amounts by Sébastien Farkas and Olivier Lopez develops new actuarial techniques to better understand insurance claims, in Scandinavian Actuarial Journal.

On the Chaotic Expansion for Counting Processes by Caroline Hillairet and Anthony Réveillac advances mathematical models with applications in finance and beyond, in Electronic Journal of Probability.

Russia’s Invasion of Ukraine and Perceived Intergenerational Mobility in Europe by Alexi Gugushvili and Patrick Präg examines how geopolitical shocks affect societal perceptions and mobility, in British Journal of Sociology.

The Total Effect of Social Origins on Educational Attainment: Meta-analysis of Sibling Correlations From 18 Countries by Lewis R. Anderson, Patrick Präg, Evelina T. Akimova, and Christiaan Monden provides a meta-analysis of sibling correlations, offering fresh insights into education and inequality, in Demography.

Context Matters When Evacuating Large Cities: Shifting the Focus from Individual Characteristics to Location and Social Vulnerability by Samuel Rufat, Emeline Comby, Serge Lhomme, and Victor Santoni shifts the focus from individual characteristics to social vulnerabilities during urban evacuations, in Environmental Science and Policy.

Gender Equality for Whom? The Changing College Education Gradients of the Division of Paid Work and Housework Among US Couples, 1968-2019 by Léa Pessin explores shifting dynamics in gendered divisions of labor among U.S. couples over the decades, in Social Forces.

The Augmented Social Scientist: Using Sequential Transfer Learning to Annotate Millions of Texts with Human-Level Accuracy by Salomé Do, Etienne Ollion, and Rubing Shen highlights how AI tools can assist in large-scale sociological research with human-level accuracy, in Sociological Methods and Research.

Investigating Swimming Technical Skills by a Double Partition Clustering of Multivariate Functional Data Allowing for Dimension Selection, by Antoine Bouvet, Salima El Kolei, Matthieu Marbac, in Annals of Applied Statistics.

Full-model estimation for non-parametric multivariate finite mixture models, by Marie Du Roy de Chaumaray, Matthieu Marbac, in Journal of the Royal Statistical Society. Series B: Statistical Methodology

Tail Inverse Regression: Dimension Reduction for Prediction of Extremes, by Anass Aghbalou, François Portier, Anne Sabourin, Chen Zhou, in Bernoulli.

Proxy-analysis of the genetics of cognitive decline in Parkinson’s disease through polygenic scores, by Johann Faouzi, Manuela Tan, Fanny Casse, Suzanne Lesage, Christelle Tesson, Alexis Brice, Graziella Mangone, Louise-Laure Mariani, Hirotaka Iwaki, Olivier Colliot, Lasse Pihlstrom, Jean-Christophe Corvol, in NPJ Parkinson’s Disease.

Benign Overfitting and Adaptive Nonparametric Regression, by Julien Chhor, Suzanne Sigalla, Alexandre Tsybakov, in Probability Theory and Related Fields.

🎯 Discover more CREST publications on our HAL webpage.

🌍 Impactful Events and Conferences

CREST actively participated in and hosted events that fostered collaboration and knowledge exchange:

- European Parliament Panel: Sociologist Paola Tubaro led a pivotal discussion on alternatives to platform-driven gig economies, bringing sociological insights to policy discussions.

- Publication by the National Courts of Audit: Barometer of Fiscal and Social Contributions in France – Second Edition 2023.

- NeurIPS 2024: CREST had a strong presence with 19 papers selected, showcasing cutting-edge work in artificial intelligence and neural information processing.

- Cyber-Risk Conference Cyr2fi: Co-organized with École Polytechnique, this event highlighted the interdisciplinary approaches needed to address growing cybersecurity threats.

- Nobel Prize Lecture: Researchers and PhDs of IP Paris Economics Department celebrated the 2023 Nobel Prize in Economics, reinforcing academic excellence.

📅 Join future events: Visit our calendar.

2024 brought two new chairs at CREST:

- Cyclomob by Marion Leroutier highlights research into sustainable urban mobility, funded through a regional chair.

- Impact Investing Chair by Olivier-David Zerbib to maximize the positive impact of the investment on the environment and society.

🏆 Awards and Recognitions

2024 was a year of accolades for CREST:

- 5 ERC Grants for CREST in 2024: Yves Le Yaouanq has recently joined the 2024 group of ERC grantees, which already includes Samuel Rufat, Olivier Gossner, Julien Combe, and Marion Goussé.

- CNRS Bronze Medal: Clément Malgouyres for contributions to labor economics.

- L’Oréal-UNESCO Young Talent: Solenne Gaucher recognized for her sustainable development work.

- EALE Young Labor Economist Prize: Federica Meluzzi for innovative labor market studies.

- 2024 AEJ Best Paper Awards in Macroeconomics: Giovanni Ricco won the award for his paper “The Transmission of Monetary Policy Shocks”, co-authored with Silvia Miranda-Agrippino.

- Louis Bachelier Prize: Peter Tankov honored for achievements in mathematical finance.

In 2024, several CREST researchers were also appointed to positions at various institutions:

- The Economic Journal: Roland Rathelot was appointed Managing Editor.

- The Econometric Society: Olivier Gossner was named Fellow of the Econometric Society.

- French Ministry of Economics: Franck Malherbet appointed as a member of the Expert Group on the Minimum Growth Wage.

📚 Books and Projects

This year, CREST researchers authored several impactful books:

- Ce qui échappe à l’intelligence artificielle, edited by François Levin and Étienne Ollion, critically examines the limits of AI in understanding human complexity.

- Peut-on être heureux de payer des impôts ? by Pierre Boyer engages readers in a thought-provoking discussion on the role of taxation in society.

- Introduction aux Sciences Économiques, cours de première année à l’Ecole polytechnique by Olivier Gossner et al. serves as an accessible entry point for students into economic principles.

- Une étrange victoire, l’extrême droite contre la politique by Michaël Foessel and Étienne Ollion explores the relationship between politics and far-right ideologies.

2024 was also marked by the second edition of Beyond the PhD, a series of videos dedicated to the doctoral journey. This edition explored how the definition of a PhD evolves, through students at different stages of their studies across all CREST research clusters.

📣 Media and Outreach

CREST researchers were featured in:

- 80+ media outlets, including Le Monde, Le Nouvel Obs, Les Échos, University World News, BBC News Brazil, France Culture, Libération, Le Cercle des Économistes, Mediapart, AOC…

- 30+ op-eds and articles, shaping public discourse.

🎙️ Featured Interview: Pauline Rossi discusses economic inequalities in Le Cercle des Économistes. Listen here.

CREST celebrates a year of remarkable achievements and meaningful contributions to research, society, and global conversations. From groundbreaking publications to prestigious awards and impactful events, our community has continued to push boundaries and inspire innovation.

Looking ahead to 2025, we remain committed to fostering interdisciplinary research, addressing societal challenges, and nurturing a collaborative environment for researchers and students.

19 CREST Papers selected for the 2024 NeurIPS Conference on Neural Information Processing Systems

In December 2024, CREST researchers and PhD students will present their latest papers at the 2024 NeurIPS conference, to be held in Vancouver, Canada.

About the NeurIPS Conference

Founded in 1987, the conference has evolved into a prominent annual interdisciplinary event, featuring multiple tracks that include invited talks, demonstrations, symposia, and oral and poster presentations of peer-reviewed papers.

In addition to the main program, the event hosts a professional exhibition highlighting real-world applications of machine learning, as well as tutorials and topical workshops designed to foster the exchange of ideas in a more informal setting.

NeurIPS, alongside ICML, ranks among the top three most prestigious international conferences in Artificial Intelligence.

CREST’s papers to be presented

The conference’s focus resonates with CREST’s contributions to AI, particularly in applying statistical and mathematical frameworks to emerging challenges. CREST researchers have developed innovative methods in areas like optimal transport, reinforcement learning, and auction theory.

19 papers from CREST researchers and PhD students will be presented during the conference.

To browse the papers to be presented at NeurIPS 2024, please visit: https://nips.cc/virtual/2024/papers.html?filter=titles

Source: https://neurips.cc/About

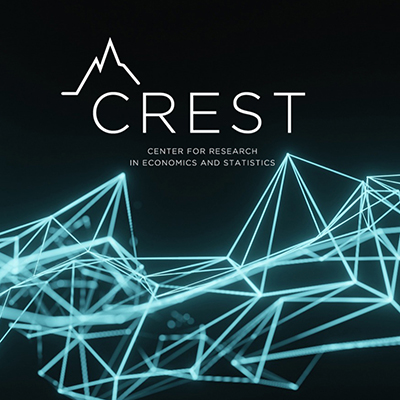

Interview – Solenne Gaucher, 2024 laureate of the L’Oréal-UNESCO For Women in Science prize

Solenne Gaucher, a postdoctoral researcher in statistics at CREST-GENES, recently received the prestigious L’Oréal-UNESCO For Women in Science prize. Her main interests are sequential learning and sequential decision problems, as well as fair machine learning.

At CREST, Solenne works with Vianney Perchet, a researcher in statistics who teaches at ENSAE Paris, on the FairPlay project, in partnership with INRIA.

We took the opportunity of this prize to learn a little more about Solenne, her background, and her work at CREST.

Congratulations on this prestigious prize! What does receiving the L’Oréal-UNESCO For Women in Science prize mean to you?

I am deeply honored to receive this prize, awarded by such a prestigious jury. Beyond the recognition of my work, this prize comes with a responsibility that I take to heart. As an ambassador of the Fondation L’Oréal-Unesco pour les Femmes et la Science, I want to actively support its mission: promoting greater inclusion of women in science, which is a fundamental issue for gender equality.

In your view, what are the reasons for the under-representation of women in science, and what impact could better representation have?

The number of women in scientific fields remains alarmingly low. In France, they account for only 29% of researchers in science, and this proportion drops even further in disciplines such as mathematics, where only 22% of university faculty are women. These figures do more than reflect the low presence of women in scientific tracks: they are also one of its causes.

I am convinced that the lack of visible female role models in higher education can hold back the ambitions of female students and lead them to doubt their legitimacy. Other factors add to this:

- Insufficient support from family and friends for pursuing a scientific career;

- A feeling of illegitimacy in an environment perceived as very masculine;

- A stronger aversion to competition, which can steer some young women away from tracks seen as elitist.

This situation is worrying, because it holds back gender equality in our society. Scientific studies open the door to some of the most prestigious and best-paid careers. To remedy this, it is essential to take targeted action throughout young women's education, from early childhood, by fighting gender stereotypes, through to higher education, by advising them on their choices and actively encouraging them to pursue scientific careers.

How do you think this prize will influence your career and your future research projects?

As a young researcher, this prize brings both recognition and valuable visibility to my work. More practically, this substantial prize gives me a degree of financial independence. In particular, it will allow me to fund a research stay abroad, to travel to present my work, and to build collaborations with researchers from a variety of backgrounds. This prize therefore opens up new perspectives for my research projects.

You received this prize for your work on algorithmic fairness. Can you tell us about this work?

My work focuses on fairness issues in machine learning algorithms. To understand why these algorithms can produce biased answers, it is essential to understand how they work: they learn from large datasets to identify relationships between the different elements described by the data. For example, text generation software associates certain words with specific contexts and reproduces these associations in its answers.

However, if the training data contains biases, the algorithms may reproduce them, or even amplify them. For example, Reuters journalists reported that an algorithm for recruiting engineers, trained on historical hiring data, reproduced the biases present in that data by discriminating against applications from women. Such cases illustrate a growing consensus among scientists: machine learning algorithms, when trained on biased data, can perpetuate or worsen existing discrimination.

My research aims to measure and prevent discrimination by algorithms. The complexity of the subject goes beyond its purely mathematical side: a decision that is fair in one context may seem unfair in another, which calls for developing several complementary approaches. In the short term, I want to study certain specific fairness criteria, and in particular to understand the consequences of these constraints and how to build them into algorithms. In the long term, my research aims to contribute to a documented toolbox of fair algorithms. It would give decision-makers practical methods for implementing a given criterion, while explaining the consequences and potential adverse effects of these choices.
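Editor's illustration: to make the idea of measuring discrimination concrete, the minimal sketch below computes a demographic parity gap, one commonly used fairness criterion, on a toy set of hiring decisions. The choice of criterion, the function names, and the data are all assumptions made for illustration; the interview does not specify which criteria or methods are studied in this research.

```python
from collections import defaultdict


def selection_rates(decisions, groups):
    """Return the share of positive decisions (1 = shortlisted) per group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for decision, group in zip(decisions, groups):
        totals[group] += 1
        positives[group] += decision
    return {group: positives[group] / totals[group] for group in totals}


def demographic_parity_gap(decisions, groups):
    """Difference between the highest and lowest selection rates.

    A gap of 0 means every group is shortlisted at the same rate.
    """
    rates = selection_rates(decisions, groups)
    return max(rates.values()) - min(rates.values())


# Hypothetical toy data: outputs of an imaginary screening model.
decisions = [1, 1, 1, 0, 1, 0, 0, 1, 0, 0]
groups = ["M", "M", "M", "M", "M", "F", "F", "F", "F", "F"]

rates = selection_rates(decisions, groups)
gap = demographic_parity_gap(decisions, groups)
print(rates)          # selection rate per group
print(round(gap, 2))  # gap between the two groups
```

On this toy data, 4 of 5 candidates in group "M" are shortlisted against 1 of 5 in group "F", so the gap is large. Demographic parity is only one possible criterion; as the answer above notes, a criterion that looks fair in one context may not be appropriate in another.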

Can you tell us what motivated you to explore the field of fairness in artificial intelligence algorithms?

This research area has two major attractions for me. On the one hand, it addresses a problem with significant societal impact, where mathematicians have a key role to play. On the other hand, it is an emerging and particularly dynamic field of research. Many fundamental questions remain open, and the field offers truly captivating mathematical challenges.

What led you to choose a career as a researcher in statistics and applied mathematics?

I chose a scientific career out of a passion for mathematics. I had no specific profession in mind when I started my studies, and no other goal than to keep doing science, but I knew these studies would open many doors. At each decision point, I followed the path that allowed me to keep doing mathematics, which I particularly enjoyed. So I quite naturally did a research master's degree, which led me to a PhD.

I particularly value the freedom that being a researcher gives me. First, the freedom to work on the problems that interest me, and to keep learning new techniques and discovering new topics. And, of course, the freedom to choose whom I work with. It is also a profession that brings real intellectual pleasure: modeling a real problem in mathematical terms, thinking and reasoning, understanding!

Teaching is also a part of this profession that I find truly rewarding, because it lets you see the impact of your work immediately, through your students' visible progress.