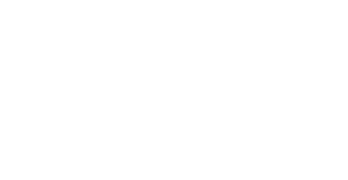

The American Economic Association announced its list of award recipients for 2024.

Giovanni Ricco, Professor at Ecole polytechnique, received an American Economic Journal (AEJ) Best Paper Award for his work with Silvia Miranda-Agrippino, Research Economist in the Monetary Policy Division at the Federal Reserve Bank of New York and Research Affiliate in the Monetary Economics and Fluctuations (MEF) programme of the CEPR. The paper was published in 2021 in the American Economic Journal: Macroeconomics, while Giovanni Ricco was a Professor in the Department of Economics at the University of Warwick.

The annual American Economic Journal (AEJ) Best Paper Awards highlight the best paper published in each of the American Economic Journals: Applied Economics, Economic Policy, Macroeconomics, and Microeconomics over the last three years. Nominations are provided by AEA members, and winners are selected by the journals’ Boards of Editors. Complimentary full-text articles are available at https://www.aeaweb.org/about-aea/honors-awards/aej-best-papers.

“The Transmission of Monetary Policy Shocks” by Silvia Miranda-Agrippino and Giovanni Ricco, American Economic Journal: Macroeconomics 13(3), pp. 74-107, July 2021

Commonly used instruments for the identification of monetary policy disturbances are likely to combine the true policy shock with information about the state of the economy due to the information disclosed through the policy action. We show that this signaling effect of monetary policy can give rise to the empirical puzzles reported in the literature, and propose a new high-frequency instrument for monetary policy shocks that accounts for informational rigidities. We find that a monetary tightening is unequivocally contractionary, with deterioration of domestic demand, labor and credit market conditions as well as of asset prices and agents’ expectations.

More information on the article: The Transmission of Monetary Policy Shocks

Alicia Bassière wins second place at the Rencontres Technology for Change 2024.

Alicia Bassière is a fourth-year PhD student in energy economics at CREST-Ecole polytechnique, specializing mainly in electricity markets, supervised by David Benatia and Peter Tankov.

Alicia is committed to the fight against global warming, in which she sees energy as a fundamental component.

At the third edition of the Rencontres Technology for Change, Alicia won second place in the Pitch for Change competition, which follows the format of the "thesis in 180 seconds" contest.

Alicia presented her thesis work on modeling uncertainty in long-term investment in power generation capacity. In particular, she studies several sources of uncertainty: electricity consumption, fuel prices, the number of players in the market, and renewable production. To do so, she uses advanced probabilistic methods, which she explained to a general audience through the metaphor of tarot cards.

Beyond her thesis, Alicia is passionate about making economic research and the energy transition accessible to the general public. To this end, she actively takes part in science outreach events and collaborates with journalists. In 2022, she joined the TF1 Group’s Committee of Environmental Experts to improve journalists’ understanding of energy and climate issues, and she occasionally appears on the French TV channel LCI to share her insights.

As part of her scientific activity, Alicia Bassière is working on several research projects, jointly with her thesis supervisors:

– Moving forward blindly: capacity planning, uncertainty and environmental targets (joint work with David Benatia)

– A mean-field game model of electricity market dynamics (joint work with Peter Tankov and Roxana Dumitrescu).

Learn more about Alicia Bassière on her website: https://sites.google.com/view/alicia-bassiere/accueil?authuser=0

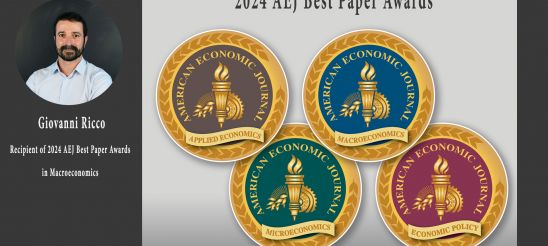

New release: “Peut-on être heureux de payer des impôts ?” (Can One Be Happy to Pay Taxes?), a book by Pierre Boyer, published by PUF

Series: Défis Économie

Publication date: 3 April 2024

Summary:

Can one be happy to pay taxes? The obvious answer seems to be no. One might even wonder whether the title itself is a provocation.

Pierre Boyer, nominated for the Prix du meilleur jeune économiste (Best Young Economist Award), shows that the relationship between citizen-taxpayers and their taxes is complex, and that it is possible to live “happily” in a country with a high tax burden. This requires, however, understanding the fragility of tax consent and never taking it for granted.

Drawing on surveys and new findings from the French population, the book proposes concrete ways to strengthen this consent and perhaps make paying taxes a little less bitter.

https://www.puf.com/peut-etre-heureux-de-payer-des-impots

CRESTive Minds – Episode 5: Claire Ecotière and Emilien Schultz, new data engineers at CREST

Claire Ecotière and Emilien Schultz recently joined the CREST team as data engineers. We took this opportunity to highlight their activities and what they can bring to CREST researchers.

- Claire, you joined CREST at the end of 2023, and Emilien, you arrived at the beginning of 2024. Could you introduce yourselves? Where are you from, and what did you do before joining CREST?

Claire > I am originally from the Paris region, and I followed a mathematics curriculum that included learning several programming languages. My academic path led me to a PhD in applied mathematics at CMAP[1] at Polytechnique, focusing on modeling human behavior in the context of climate change. During my PhD, I developed my appetite for coding and for working with researchers. I defended my thesis at the end of October, just before starting at CREST in early November 2023.

Emilien > Before joining CREST as a data scientist, I had several lives. Initially trained in applied physics at ENS Paris-Saclay, I defended a PhD thesis in sociology on research policy at Paris Sorbonne in 2016. I then conducted research in the social sciences of health for several years, with a focus on cancer. When the COVID-19 pandemic broke out, I got involved in research on controversies about science and medicine in the public sphere. Throughout these activities, a common thread was interdisciplinary collaboration around digital data. This led me to reflect on scientific programming in Python for data analysis, ranging from statistical methods to software development, and in 2021 to publish a handbook on Python for the social sciences[2]. It was a milestone in my journey to connect data, programming, and the social sciences, a journey I’m fortunate to continue by joining GENES[3] and CREST.

- Why is it necessary and promising for a research laboratory like CREST to support researchers in the production and analysis of data?

Claire > Data are crucial for grounding a research question. When discussing data, it’s imperative to consider the context in which they were acquired, including potential biases and data quality. For some research questions, data are gathered through surveys or economic games. With the experimental laboratory (IPEL) here at CREST, I can produce experimental data: I can code an experiment, run it online or here at CREST, and help with data management. By encouraging data production and analysis at CREST, we aim to improve the traceability and quality of the data used, thereby strengthening the resulting research.

Emilien > Data are inseparable from scientific activity. But the word itself can be rather misleading, as it encompasses very different approaches and entities. When we talk about data these days, what we really mean is the proliferation of digital data and the IT tools required to process it. The acceleration of research and the new constraints it faces mean that new kinds of professionals need to take part in scientific work, whether to provide IT services, to specialize in digital approaches within different disciplines, or to build and deploy new software tools. While many researchers develop their own expertise in these approaches, in some cases this represents a heavy investment of time and effort. So it’s worthwhile for a laboratory to facilitate both the use and the development of these tools by helping with the more technical aspects.

- (Claire) How do you plan to proactively engage with researchers to identify potential areas where your skills could enhance their work?

I can assist researchers on two fronts:

- data production through testing economic models

- data analysis.

Both aspects are essential in the study of a research question. I therefore plan to make my support known to researchers through discussions and presentations.

First, regarding data production, I can either assist researchers or handle the production myself.

Second, my help with data analysis can save researchers time.

Finally, I will refine my offer and methodology based on successful projects.

- (Emilien) How do you plan to initiate conversations with researchers to identify opportunities where computational social science methods can enhance their studies?

I feel that the role of a facilitator of research on digital data operates on two levels.

The first is to help answer existing questions and turn them into an operational strategy. Researchers are specialists in their field and see new methods emerging, whether based on new data or new software. They already have questions and are looking for answers; the challenge is to assess feasibility and map out the available resources. It helps to have a door to knock on, to discuss and clarify these questions and needs as much as possible.

The second level is to open up the space of possibilities, especially for research that’s just starting out. I think the best way to do this is to regularly present uses and tools as a form of ongoing technology watch, from a discovery angle, while providing resources for those who want to dig deeper. This can be done by hosting events devoted to digital methods, whether practice groups or dedicated seminars.

- Can you share an example of how you’ve successfully collaborated with researchers in the past to encourage them to explore new data-driven approaches?

Claire > During my doctoral studies, I collaborated with researchers. As my position at CREST marks the beginning of my post-doctoral career, it is a bit early to answer this question. I fully intend to develop collaborations at CREST and to come back to this next year.

Emilien > My philosophy is always to start from needs. For example, I have often helped to transform existing data into a format suitable for specific software, such as tools for language processing or network analysis. I have also been involved in setting up data collection protocols (e.g. web scraping). In some cases, when a ready-made tool was needed, I developed small applications that could easily be used for processing, thus facilitating the exploration of a dataset. More recently, I’ve been trying to help researchers choose the right strategies for processing textual data, because with the advent of language models, the range of available tools has greatly expanded.

- How do you plan to make your expertise and the potential of data more accessible to researchers who may not have a strong background in data science?

Claire > For data production and analysis, my primary tools include the oTree framework in Python, Stata, R, and data-lab servers. I aim to foster the growth of experiments at CREST. To achieve this, it’s crucial to offer training sessions on these tools and to develop comprehensive documentation. Another approach would be to give accessible presentations of completed projects, showing the value of running experiments at CREST.

Emilien > Before you can process data, you first need to want to do it, and then you need to find the means to do it. Digital tools are constantly evolving, especially in the open-source world, which means you have to keep up to date. I’m convinced that there are two different aspects to this: the first is to give everyone a basic culture of digital data processing; the second is to help people identify relevant materials so they can progress toward autonomy. I therefore believe it’s important to provide ongoing training in data processing practices, so that everyone can get started whenever they want, in particular through regular training courses on tools (programming basics, the command line, code versioning, notebooks, etc.), and to offer personalized discussions of needs to guide people toward the most appropriate resources.

- Are there specific strategies you use to encourage researchers to incorporate new or more recent options into their projects?

Claire > I rely on word of mouth and presentations within the economics cluster. I also plan to interact more with sociologists, as my work can be helpful to them. Knowing that an option exists is crucial in considering a change. I’ve already begun launching experiments, and I count on these successful examples to persuade those who might be interested in conducting experiments. Additionally, I’ve drafted procedures to illustrate how collaborations are structured and what researchers stand to gain from them.

Emilien > I don’t know if you’d call it a strategy, but my priority is to use the data already available to open new perspectives. There’s nothing like a little demo to open up possibilities and make people want to dig deeper. I’m a fervent supporter of open source and open science, although I recognize that more proprietary approaches are needed in certain cases. Whenever I can, I try to show examples of work that makes the most of the tools. Ideally, well-conducted data processing can lead to three types of output: scientific publications, datasets, and processing steps that enable reproducibility.

- Given the interdisciplinary nature of CREST, how do you see yourselves collaborating with researchers from diverse fields to create new connections and exchanges around digital strategies for their projects?

Claire > I am keen to collaborate with all researchers interested in conducting experiments or data analysis. While these experiments may vary in form, they fundamentally involve researchers with a research question and a desire to gather data to enhance their study. My work can readily be applied across the various fields represented at CREST.

Emilien > I have some experience talking with different communities, both inside and outside the social sciences. I find that the central challenge is to find a common language while accepting that each field comes with its own questions and concepts. Such exchange is facilitated to some extent by a shared culture of digital tools inherited from software engineering. However, this common language has its limits: software and packages are often specific to particular disciplines or even schools of thought. It is therefore necessary to take the time to identify the tools already in use in order to propose an appropriate response, which is impossible without a minimum of discipline-specific knowledge. For example, some communities only use R, so switching everything over to Python is not an option. On the other hand, if a task has to be done in Python, it may be worthwhile to build a small program for it. In other cases, specific software is in use and cannot be replaced. So we have to find a way to make it work!

- Who have you worked with recently, and what were the outcomes? Were you satisfied with them?

Claire > Since I began, I’ve been collaborating with the GENES IT team to revive the experimental laboratory, which had been dormant for two years. Recently, we successfully conducted our first experiment. I engage daily with researchers to develop upcoming online experiments. Additionally, I’ve assisted in analyzing data collected in Japan. Each of these experiences has been positive and has enabled me to better understand the needs of CREST. This allows me to expand the range of possibilities I plan to offer to CREST researchers.

Emilien > Since my arrival, I’ve had the opportunity to immerse myself in some of CREST’s activities, particularly in computational social sciences. I still have a lot to discover, but I’ve already had rich exchanges with researchers and PhD students from the laboratory. Claire and I have also started to build an interface with the GENES IT team, which develops and provides tools adapted to research needs. I’m going to try to nurture this exchange as much as possible by circulating information and reporting the problems we encounter. In the coming months, I’ll continue to observe and consult the laboratory’s researchers in order to build a relevant training offer, ranging from the fundamentals of digital tools (such as Jupyter notebooks or the command line) to more advanced approaches such as app prototyping. I hope to do this in collaboration with those who already use these tools, so I try to take every opportunity to exchange ideas. An important milestone will be the presentation of the Onyxia Datalab by the GENES IT team on May 2 at 1:30 pm, which will provide a first opportunity to launch a discussion on tools and needs. We look forward to seeing you there.

[1] Center for Applied Mathematics, https://cmap.ip-paris.fr/en

[2] https://pur-editions.fr/product/7857/python-pour-les-shs

[3] Groupe des Ecoles Nationales d’Economie et Statistique

New release: “Ce qui échappe à l’intelligence artificielle” (What Escapes Artificial Intelligence), edited by François Levin and Étienne Ollion, published by Hermann

Published on 27 March 2024

Series: Philosophie, Politique et Économie

Subject: Humanities, philosophy, religion

Description:

Artificial intelligence is now everywhere, and its development seems to know no limits. Not a month goes by without a frontier once thought insurmountable being cheerfully crossed.

Rather than asking which frontier will be crossed next, this book asks what fundamentally escapes AI. Are there absolute limits beyond which AI could not go? Areas of life that would remain inaccessible to it, such as love, anger, thought, creation, encounter, or meaning? Or are these merely contingent limits, ready to be overcome thanks to the flow of data and the power of machine learning algorithms? Or perhaps the distinction lies elsewhere: not in specific domains, but in a certain experience of the world that would differ fundamentally between humans and machines.

To answer these questions, and to understand why we are so keen to set limits on artificial intelligence, this book brings together interdisciplinary contributions: drawing on philosophy, the social sciences, and computer science, it attempts to make sense of the feeling of strangeness we experience in the face of the meteoric development of intelligent devices.

More information: https://www.editions-hermann.fr/livre/ce-qui-echappe-a-l-intelligence-artificielle-francois-levin

CRESTive Minds – Episode 5 : Claire Ecotière and Emilien Schultz, new data engineers at CREST

Claire Ecotière and Emilien Schultz recently joined CREST team as data engineers. We wanted to take this opportunity to highlight their activities and what they could bring out to CREST researchers.

- Claire, you have joined CREST by the end of 2023 and Emilien you arrived in the beginning of 2024. Could you introduce yourselves, where are you from? What did you do before joining CREST?

Claire > I am originally from the Paris region, and I pursued a mathematics curriculum with the learning of several programming languages. My academic path led me to pursue a thesis in applied mathematics at CMAP[1] at Polytechnique focusing on modeling human behavior in the context of climate change. During my thesis, I was able to deepen my appetite for coding and interaction with researchers. I defended my thesis at the end of October, just before starting at CREST in early November 2023.

Emilien > Before joining CREST as a data scientist, I had several lives. Initially trained in applied physics at ENS Paris-Saclay, I defended my PhD thesis in sociology at Paris Sorbonne in 2016 on research policies. I then conducted research in social sciences of health for several years, with a focus on cancer area. With the COVID-19 outburst, I got involved in research studying controversies on science and medicine in the public space. Through all these activities, I maintained a common thread of interdisciplinary collaborations on digital data. This led me to get involved in a reflection on scientific programming in Python for data analysis, ranging from statistical methods to software development. It led me to publish a handbook in 2021 on Python for the social sciences[2]. It was a milestone in my journey to connect data, programming, and social sciences, which I’m fortunate enough to continue by joining GENES[3] and CREST.

- Why is it necessary and promising for a research laboratory like CREST to support researchers in the production and analysis of data?

Claire > In reality, the use of data is crucial for anchoring a research question. When discussing data, it’s imperative to consider the context in which they were acquired, including potential biases or quality. Some research questions may gather data through surveys or economic games. With the experimental laboratory (IPEL) here at CREST, I can produce experimental data. I can code the experiment, run the experiment online or here at CREST and help with the data management. By encouraging data production and analysis at CREST, we aim to improve the traceability and quality of the data used, thereby enhancing the resulting research.

Emilien > Data is consubstantial with scientific activity. But the word itself can be rather misleading, as it encompasses very different approaches and entities. When we talk about data these days, what we really mean is the proliferation of digital data and the IT tools required to process it. The acceleration and new constraints on research activities mean that new professionals need to intervene in the scientific work, whether to provide IT services, specialize in digital approaches to different disciplines, or build and deploy new software tools. While many researchers develop their own expertise in these approaches, in some cases this can be a heavy investment, both in terms of time and work. So, it’s worthwhile for a laboratory to facilitate both use and development, by helping with the more technical aspects.

- (Claire) How do you plan to proactively engage with researchers to identify potential areas where your skills could enhance their work?

I can assist researchers on two fronts:

- data production through testing economic models

- data analysis.

Both aspects are essential in the study of a research question. Therefore, I plan to disseminate my support to researchers through discussions and presentations.

First concerning the production of data, I can help the researchers or handle their production.

Second, helping with data analysis can save time for the researcher.

Furthermore, based on successful projects, I will redefine my offer and the methodology.

- (Emilien) How do you plan to initiate conversations with researchers to identify opportunities where computational social science methods can enhance their studies?

I have the feeling that the role of facilitator of research on digital data should intervene on two levels.

The first is to help answer questions already existing, and bring them to an operationalization strategy: indeed, researchers are specialists in their field, and see the arrival of new methods, whether using new data or software. The challenge is to assess feasibility and to map out available resources. So, they already have questions, and are looking for answers. It’s a good idea to have a door to knock on, to discuss and clarify these questions and needs as much as possible.

The second level is to open the space of possibilities, especially for research that’s just starting out. I think the best way to do this is to regularly present uses and tools in the form of continuous monitoring from a discovery angle, providing resources to take things further for those who want to dig deeper. This can be achieved by hosting events specifically devoted to digital methods, be they practice groups or dedicated seminars.

- Can you share an example of how you’ve successfully collaborated with researchers in the past to encourage them to explore new data-driven approaches?

Claire > During my doctoral studies, I collaborated with researchers. As my position at CREST marks the beginning of my post-doctoral career, this question is premature. I fully intend to develop collaborations at CREST and address this matter next year.

Emilien > My philosophy is always to start from needs. For example, I have often helped to transform existing data into a format suitable for specific software, for example in language processing or network analysis. I have also been involved in setting up data collection protocols (e.g. web scrapping). In some cases, when there was a need for a ready-made tool, I developed small applications that could easily be used for processing, thus facilitating the exploration of a dataset. More recently, I’m trying to help you choose the right strategies for processing textual data, because with the advent of language models, the range of existing tools has greatly expanded.

- How do you plan to make your expertise and the potential of data more accessible to researchers who may not have a strong background in data science?

Claire > In the realm of data production and analysis, my primary tools include: the otree package in Python, Stata, R, and data-lab servers. I aim to foster the growth of experiments at CREST. To achieve this, it’s crucial to offer training sessions on the usage of these tools and develop comprehensive documentation. Another approach would involve delivering simplified presentations on various projects conducted, elucidating the significance of conducting experiments at CREST.

Emilien > Before you can process data, you first need to want to do it, and then you need to find the means to do it. Digital tools are constantly evolving, especially in the context of open source. This means that you have to keep up to date. I’m convinced that there are two different aspects to this: the first is to enable everyone to have a basic culture of digital data processing; the second is to be able to help to identify relevant materials to progress to autonomy. I therefore believe it’s important to provide ongoing training in data processing practices, so that everyone can get started when they want, in particular through regular training courses on tools (programming basics, the command line, code versioning, notebooks, etc.), and to plan a personalized exchange on needs to guide people towards the most appropriate resources.

- Are there specific strategies you employ to encourage researchers to consider new or more recent options into their projects?

Claire > I rely on word of mouth and presentations within the economics cluster. I plan to interact more with the sociologist too as my work can be helpful for them. Knowing that an option exists is crucial in considering a change. I’ve already begun launching experiments, and I count on these successful examples to persuade those who might be interested in conducting experiments. Additionally, I’ve drafted procedures to illustrate how collaborations are structured and what researchers stand to gain from them.

Emilien > I don’t know if you’d call it a strategy, but my priority is to use the data already available to open new perspectives. There’s nothing like a little demo to open the possibilities and make people want to dig deeper. I’m also a fervent supporter of open source and open science, although I’m also convinced that more proprietary approaches are needed in certain cases. Whenever I can, I try to show examples of work that makes the most of the tools. Ideally, well-conducted data processing can lead to three types of use: scientific publications, datasets, and processing steps that enable reproducibility.

- Given the interdisciplinary nature of CREST, how do you see yourself collaborating with researchers from diverse fields to create new connections and exchanges around digital strategies to their projects?

Claire > I am keen to collaborate with all researchers interested in conducting experiments or data analysis. While these experiments may vary in form, fundamentally, they involve researchers with a research question and a desire to gather data to enhance their study. My work can be readily offered to various domains within CREST.

Emilien > I have some experience talking to different communities, both inside and outside the social sciences. I find that the central challenge is to find a common language while accepting that each field comes with its own questions and concepts. Such exchanges are facilitated to some extent by a shared culture of digital tools that comes from software engineering. However, this common language has its limits: software and packages are often specific to particular disciplines, or even to particular schools of thought within them. It is therefore necessary to take the time to identify the tools already in use in order to propose an appropriate response, and this is impossible without a minimum of discipline-specific knowledge. For example, some communities use only R, so it's not possible to switch everything over to Python. On the other hand, if a particular task has to be done in Python, it may be worthwhile to build a small program to perform it. In other cases, specific software is used that cannot be replaced. So we have to find a way to make it work!

- Recently, who did you interact with and what were the conclusions and results? Were you satisfied with them?

Claire > Since I began, I’ve been collaborating with the GENES IT team to revive the experimental laboratory, which had been dormant for two years. Recently, we successfully conducted our first experiment. I engage daily with researchers to develop upcoming online experiments. Additionally, I’ve assisted in analyzing data collected in Japan. Each of these experiences has been positive and has enabled me to better understand the needs of CREST. This allows me to expand the range of possibilities I plan to offer to CREST researchers.

Emilien > Since my arrival, I've had the opportunity to immerse myself in some of CREST's activities, particularly in computational social sciences. I still have a lot to discover, but I've already had rich exchanges with researchers and PhD students from the laboratory. Claire and I have also started to build an interface with the GENES IT team, which develops and provides tools adapted to research needs. I'm going to try to feed this exchange as much as possible by passing on information and reporting the problems encountered. In the coming months, I'll continue to observe and consult the laboratory's researchers in order to build a relevant training offer, ranging from the fundamentals of digital tools (such as using Jupyter notebooks or the command line) to more advanced approaches such as app prototyping. I hope to do this in collaboration with those who already use these tools, so I try to take every opportunity to exchange ideas. An important milestone will be the presentation of the Onyxia Datalab by the GENES IT team on May 2 at 1:30 pm, which will provide a first opportunity to launch a discussion on tools and needs. We look forward to seeing you there.

[1] Center for Applied Mathematics, https://cmap.ip-paris.fr/en

[2] https://pur-editions.fr/product/7857/python-pour-les-shs

[3] Groupe des Ecoles Nationales d’Economie et Statistique

New release: "Ce qui échappe à l'intelligence artificielle" (What Escapes Artificial Intelligence), a volume edited by François Levin and Étienne Ollion, published by Éditions Hermann

Published March 27, 2024

Series: Philosophie, Politique et Économie

Subject: Humanities, philosophy, religion

Description:

Artificial intelligence is now everywhere, and its development seems to know no limits. Hardly a month goes by without some frontier once thought insurmountable being cheerfully crossed.

Rather than asking which frontier will be crossed next, this book asks what fundamentally escapes AI. Are there absolute limits beyond which AI could never go? Areas of life that would remain inaccessible to it, such as love, anger, thought, creation, encounter, meaning? Or are these states merely contingent limits, ready to be overcome thanks to the flow of data and the power of machine learning algorithms? But perhaps the distinction lies elsewhere: not in specific domains, but in a certain experience of the world that would differ fundamentally between humans and machines.

To answer these questions, and to understand why we are so keen to set limits on artificial intelligence, this book brings together interdisciplinary contributions: drawing on philosophy, the social sciences and computer science, it attempts to make sense of the feeling of strangeness we experience in the face of the meteoric development of intelligent devices.

More information: https://www.editions-hermann.fr/livre/ce-qui-echappe-a-l-intelligence-artificielle-francois-levin

Le Cercle des Économistes: an interview with Pierre Boyer for the podcast Génération Économie.

In this new episode of Génération Économie, we welcome Pierre Boyer, professor at École polytechnique, deputy director of the Institut des Politiques Publiques, and a 2023 nominee for the Prix du meilleur jeune économiste français (Best Young French Economist Award), awarded by the Cercle des économistes and the newspaper Le Monde. Joining him is Amédée, a master's student in accounting, control and audit and a member of the Cercle des Économistes' youth project.

March 25, 2024